This video shows the steps and code to run an LLM (Large Language Model) on your Windows laptop using Python.

This demo uses the following model from Hugging Face:

https://huggingface.co/Daviduche03/SmolLM2-Instruct

Steps:

- Download the model from huggingface.co

git clone https://huggingface.co/Daviduche03/SmolLM2-Instruct

- Create and activate a Python virtual environment

python -m venv llm-env

llm-env\Scripts\activate # For Windows

- Install transformers in Python’s virtual environment

pip install torch transformers

- Run the Python code

python RunLLM.py
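Before running the script, it can help to confirm that the virtual environment is active and that the packages from the install step are visible to Python. The sketch below is one way to do that with the standard library only; the helper names `in_virtualenv`, `missing_packages` and `report` are made up for this illustration and are not part of the video.

```python
import importlib.util
import sys


def in_virtualenv():
    """Return True when the interpreter is running inside a venv."""
    # Inside a venv, sys.prefix points at the environment while
    # sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


def missing_packages(names):
    """Return the packages from `names` that cannot be found for import."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def report(names=("torch", "transformers")):
    """Build a short, human-readable status message."""
    lines = ["virtual environment active: " + str(in_virtualenv())]
    missing = missing_packages(names)
    if missing:
        lines.append("missing: " + ", ".join(missing))
        lines.append("fix: pip install " + " ".join(missing))
    else:
        lines.append("all required packages found")
    return "\n".join(lines)


print(report())
```

If `transformers` is reported as missing, running the main script would stop with `ModuleNotFoundError: No module named 'transformers'`, which is the error that appears in the Command Prompt output when the script is started before the install step.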

I hope you like this video. For any questions, suggestions or appreciation please contact us at: https://programmerworld.co/contact/ or email at: programmerworld1990@pworld1990

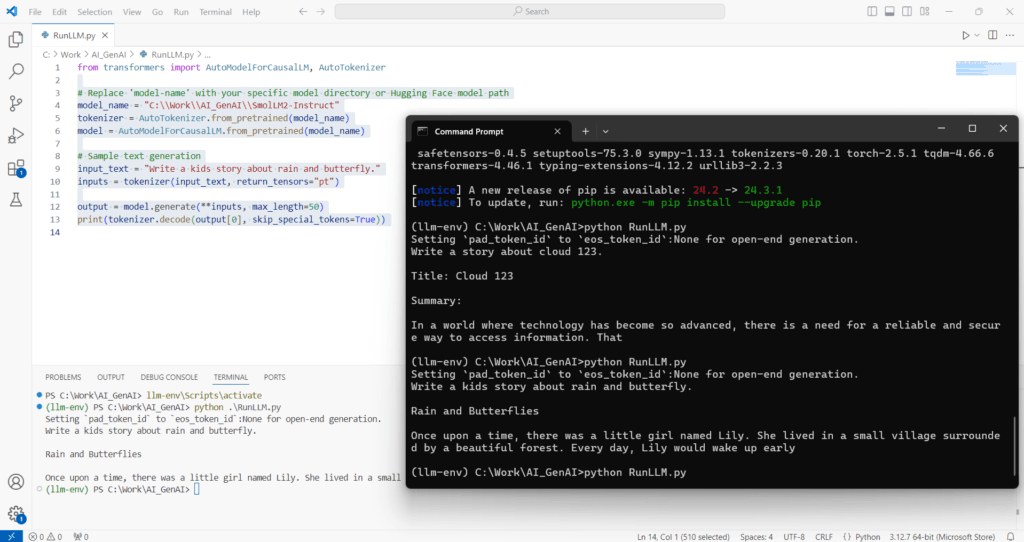

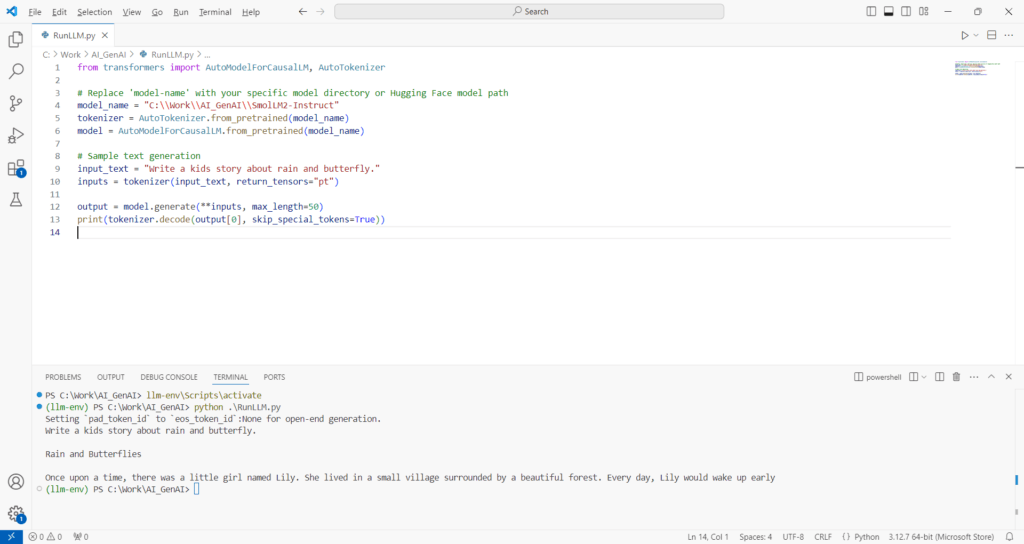

Python code:

from transformers import AutoModelForCausalLM, AutoTokenizer

# Replace model_name with your local model directory or a Hugging Face model ID

model_name = "C:\\Work\\AI_GenAI\\SmolLM2-Instruct"

tokenizer = AutoTokenizer.from_pretrained(model_name)

model = AutoModelForCausalLM.from_pretrained(model_name)

# Sample text generation

input_text = "Write a kids story about rain and butterfly."

inputs = tokenizer(input_text, return_tensors="pt")

# max_length counts the prompt tokens as well, so the output stops after 50 tokens in total

output = model.generate(**inputs, max_length=50)

print(tokenizer.decode(output[0], skip_special_tokens=True))
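The script above limits generation with `max_length=50`, which includes the prompt, so the stories in the output are cut off mid-sentence. A possible variation, sketched below, uses `max_new_tokens` to limit only the generated part and passes `pad_token_id` explicitly, which should avoid the "Setting `pad_token_id` to `eos_token_id`" notice. The function names `generation_kwargs` and `generate_story` and the default of 200 tokens are assumptions for illustration, not something taken from the video.

```python
def generation_kwargs(eos_token_id, max_new_tokens=200):
    """Build keyword arguments for model.generate().

    max_new_tokens limits only the generated continuation, whereas
    max_length also counts the tokens of the prompt.
    """
    kwargs = {"max_new_tokens": max_new_tokens}
    # Set pad_token_id explicitly when an EOS token is available;
    # skip it when the tokenizer defines no EOS token.
    if eos_token_id is not None:
        kwargs["pad_token_id"] = eos_token_id
    return kwargs


def generate_story(model_dir, prompt, max_new_tokens=200):
    """Load a local model directory and return the generated text."""
    # Imported lazily so the helper above works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_dir)
    model = AutoModelForCausalLM.from_pretrained(model_dir)
    inputs = tokenizer(prompt, return_tensors="pt")
    output = model.generate(
        **inputs, **generation_kwargs(tokenizer.eos_token_id, max_new_tokens)
    )
    return tokenizer.decode(output[0], skip_special_tokens=True)
```

With the model folder from this demo, a call could look like `print(generate_story(r"C:\Work\AI_GenAI\SmolLM2-Instruct", "Write a kids story about rain and butterfly."))`. A larger token budget takes longer on a laptop CPU, so the value is worth tuning.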

Screenshots:

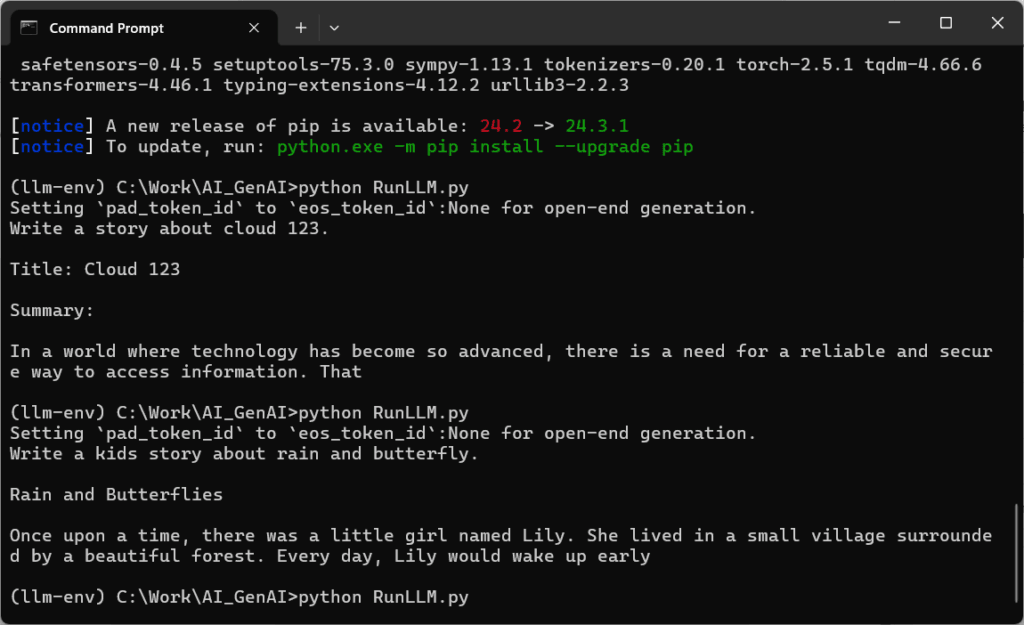

Windows Command Prompt output:

C:\Work\AI_GenAI>git clone https://huggingface.co/Daviduche03/SmolLM2-Instruct

Cloning into 'SmolLM2-Instruct'...

remote: Enumerating objects: 16, done.

remote: Counting objects: 100% (12/12), done.

remote: Compressing objects: 100% (12/12), done.

remote: Total 16 (delta 1), reused 0 (delta 0), pack-reused 4 (from 1)

Unpacking objects: 100% (16/16), 1.13 MiB | 1.47 MiB/s, done.

fatal: active `post-checkout` hook found during `git clone`:

C:/Work/AI_GenAI/SmolLM2-Instruct/.git/hooks/post-checkout

For security reasons, this is disallowed by default.

If this is intentional and the hook should actually be run, please

run the command again with `GIT_CLONE_PROTECTION_ACTIVE=false`

warning: Clone succeeded, but checkout failed.

You can inspect what was checked out with 'git status'

and retry with 'git restore --source=HEAD :/'

C:\Work\AI_GenAI>python -m venv llm-env

C:\Work\AI_GenAI>llm-env\Scripts\activate

(llm-env) C:\Work\AI_GenAI>python RunLLM.py

Traceback (most recent call last):

File "C:\Work\AI_GenAI\RunLLM.py", line 1, in <module>

from transformers import AutoModelForCausalLM, AutoTokenizer

ModuleNotFoundError: No module named 'transformers'

(llm-env) C:\Work\AI_GenAI>pip install torch transformers

Collecting torch

Using cached torch-2.5.1-cp312-cp312-win_amd64.whl.metadata (28 kB)

Collecting transformers

Using cached transformers-4.46.1-py3-none-any.whl.metadata (44 kB)

Collecting filelock (from torch)

Using cached filelock-3.16.1-py3-none-any.whl.metadata (2.9 kB)

Collecting typing-extensions>=4.8.0 (from torch)

Using cached typing_extensions-4.12.2-py3-none-any.whl.metadata (3.0 kB)

Collecting networkx (from torch)

Using cached networkx-3.4.2-py3-none-any.whl.metadata (6.3 kB)

Collecting jinja2 (from torch)

Using cached jinja2-3.1.4-py3-none-any.whl.metadata (2.6 kB)

Collecting fsspec (from torch)

Using cached fsspec-2024.10.0-py3-none-any.whl.metadata (11 kB)

Collecting setuptools (from torch)

Using cached setuptools-75.3.0-py3-none-any.whl.metadata (6.9 kB)

Collecting sympy==1.13.1 (from torch)

Using cached sympy-1.13.1-py3-none-any.whl.metadata (12 kB)

Collecting mpmath<1.4,>=1.1.0 (from sympy==1.13.1->torch)

Using cached mpmath-1.3.0-py3-none-any.whl.metadata (8.6 kB)

Collecting huggingface-hub<1.0,>=0.23.2 (from transformers)

Using cached huggingface_hub-0.26.2-py3-none-any.whl.metadata (13 kB)

Collecting numpy>=1.17 (from transformers)

Using cached numpy-2.1.3-cp312-cp312-win_amd64.whl.metadata (60 kB)

Collecting packaging>=20.0 (from transformers)

Using cached packaging-24.1-py3-none-any.whl.metadata (3.2 kB)

Collecting pyyaml>=5.1 (from transformers)

Using cached PyYAML-6.0.2-cp312-cp312-win_amd64.whl.metadata (2.1 kB)

Collecting regex!=2019.12.17 (from transformers)

Using cached regex-2024.9.11-cp312-cp312-win_amd64.whl.metadata (41 kB)

Collecting requests (from transformers)

Using cached requests-2.32.3-py3-none-any.whl.metadata (4.6 kB)

Collecting safetensors>=0.4.1 (from transformers)

Using cached safetensors-0.4.5-cp312-none-win_amd64.whl.metadata (3.9 kB)

Collecting tokenizers<0.21,>=0.20 (from transformers)

Using cached tokenizers-0.20.1-cp312-none-win_amd64.whl.metadata (6.9 kB)

Collecting tqdm>=4.27 (from transformers)

Using cached tqdm-4.66.6-py3-none-any.whl.metadata (57 kB)

Collecting colorama (from tqdm>=4.27->transformers)

Using cached colorama-0.4.6-py2.py3-none-any.whl.metadata (17 kB)

Collecting MarkupSafe>=2.0 (from jinja2->torch)

Using cached MarkupSafe-3.0.2-cp312-cp312-win_amd64.whl.metadata (4.1 kB)

Collecting charset-normalizer<4,>=2 (from requests->transformers)

Using cached charset_normalizer-3.4.0-cp312-cp312-win_amd64.whl.metadata (34 kB)

Collecting idna<4,>=2.5 (from requests->transformers)

Using cached idna-3.10-py3-none-any.whl.metadata (10 kB)

Collecting urllib3<3,>=1.21.1 (from requests->transformers)

Using cached urllib3-2.2.3-py3-none-any.whl.metadata (6.5 kB)

Collecting certifi>=2017.4.17 (from requests->transformers)

Using cached certifi-2024.8.30-py3-none-any.whl.metadata (2.2 kB)

Using cached torch-2.5.1-cp312-cp312-win_amd64.whl (203.0 MB)

Using cached sympy-1.13.1-py3-none-any.whl (6.2 MB)

Using cached transformers-4.46.1-py3-none-any.whl (10.0 MB)

Using cached huggingface_hub-0.26.2-py3-none-any.whl (447 kB)

Using cached fsspec-2024.10.0-py3-none-any.whl (179 kB)

Using cached numpy-2.1.3-cp312-cp312-win_amd64.whl (12.6 MB)

Using cached packaging-24.1-py3-none-any.whl (53 kB)

Using cached PyYAML-6.0.2-cp312-cp312-win_amd64.whl (156 kB)

Using cached regex-2024.9.11-cp312-cp312-win_amd64.whl (273 kB)

Using cached safetensors-0.4.5-cp312-none-win_amd64.whl (286 kB)

Using cached tokenizers-0.20.1-cp312-none-win_amd64.whl (2.4 MB)

Using cached tqdm-4.66.6-py3-none-any.whl (78 kB)

Using cached typing_extensions-4.12.2-py3-none-any.whl (37 kB)

Using cached filelock-3.16.1-py3-none-any.whl (16 kB)

Using cached jinja2-3.1.4-py3-none-any.whl (133 kB)

Using cached networkx-3.4.2-py3-none-any.whl (1.7 MB)

Using cached requests-2.32.3-py3-none-any.whl (64 kB)

Using cached setuptools-75.3.0-py3-none-any.whl (1.3 MB)

Using cached certifi-2024.8.30-py3-none-any.whl (167 kB)

Using cached charset_normalizer-3.4.0-cp312-cp312-win_amd64.whl (102 kB)

Using cached idna-3.10-py3-none-any.whl (70 kB)

Using cached MarkupSafe-3.0.2-cp312-cp312-win_amd64.whl (15 kB)

Using cached mpmath-1.3.0-py3-none-any.whl (536 kB)

Using cached urllib3-2.2.3-py3-none-any.whl (126 kB)

Using cached colorama-0.4.6-py2.py3-none-any.whl (25 kB)

Installing collected packages: mpmath, urllib3, typing-extensions, sympy, setuptools, safetensors, regex, pyyaml, packaging, numpy, networkx, MarkupSafe, idna, fsspec, filelock, colorama, charset-normalizer, certifi, tqdm, requests, jinja2, torch, huggingface-hub, tokenizers, transformers

Successfully installed MarkupSafe-3.0.2 certifi-2024.8.30 charset-normalizer-3.4.0 colorama-0.4.6 filelock-3.16.1 fsspec-2024.10.0 huggingface-hub-0.26.2 idna-3.10 jinja2-3.1.4 mpmath-1.3.0 networkx-3.4.2 numpy-2.1.3 packaging-24.1 pyyaml-6.0.2 regex-2024.9.11 requests-2.32.3 safetensors-0.4.5 setuptools-75.3.0 sympy-1.13.1 tokenizers-0.20.1 torch-2.5.1 tqdm-4.66.6 transformers-4.46.1 typing-extensions-4.12.2 urllib3-2.2.3

[notice] A new release of pip is available: 24.2 -> 24.3.1

[notice] To update, run: python.exe -m pip install --upgrade pip

(llm-env) C:\Work\AI_GenAI>python RunLLM.py

Setting `pad_token_id` to `eos_token_id`:None for open-end generation.

Write a story about cloud 123.

Title: Cloud 123

Summary:

In a world where technology has become so advanced, there is a need for a reliable and secure way to access information. That

(llm-env) C:\Work\AI_GenAI>python RunLLM.py

Setting `pad_token_id` to `eos_token_id`:None for open-end generation.

Write a kids story about rain and butterfly.

Rain and Butterflies

Once upon a time, there was a little girl named Lily. She lived in a small village surrounded by a beautiful forest. Every day, Lily would wake up early

(llm-env) C:\Work\AI_GenAI>
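In the output above, `git clone` ended with "Clone succeeded, but checkout failed", so the model folder may be incomplete until the checkout is retried (the message itself suggests `git restore --source=HEAD :/`). The sketch below is one way to check the folder before loading it. It assumes the usual Hugging Face file names (`config.json`, `model.safetensors` or `pytorch_model.bin`), which were not verified against this repository, and it does not recognise weights split across several files. The helper names are hypothetical.

```python
from pathlib import Path

# First line of a Git LFS pointer file, as defined by the Git LFS spec.
LFS_HEADER = b"version https://git-lfs.github.com/spec/v1"

# Assumed file names: common Hugging Face conventions, not verified
# against this particular repository.
WEIGHT_FILES = ("model.safetensors", "pytorch_model.bin")


def is_lfs_pointer(path):
    """Return True if the file is a small Git LFS pointer, not real data."""
    with open(path, "rb") as handle:
        return handle.read(len(LFS_HEADER)) == LFS_HEADER


def check_model_dir(model_dir):
    """Return a list of problems found in a cloned model directory."""
    root = Path(model_dir)
    problems = []
    if not (root / "config.json").is_file():
        problems.append("config.json is missing (checkout may have failed)")
    weights = [root / name for name in WEIGHT_FILES if (root / name).is_file()]
    if not weights:
        problems.append("no weight file found")
    for path in weights:
        if is_lfs_pointer(path):
            problems.append(path.name + " is a Git LFS pointer, not the weights")
    return problems
```

Calling `check_model_dir(r"C:\Work\AI_GenAI\SmolLM2-Instruct")` returns an empty list when nothing looks wrong. A reported LFS pointer usually means Git LFS was not installed or did not run during the clone, so the real weights were never downloaded.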